Tuesday, April 29, 2008

Introduction to Background Jobs

A SAP background job defines the program or group of programs which are going to be executed by the background work processes.

Components of the Background Jobs

A background job has the following components:

¨ Job name. Defines the name assigned to the job.

¨ Job class. Indicates the type of background processing priority assigned to the job.

¨ Target host. It's the SAP instance where the job will be executed.

¨ Job steps. A job step defines the program (either ABAP or external) which will be executed.

¨ Start time and repeat interval. Define when the job will be started and whether it should

be periodically executed.

¨ Job print lists. These lists specify the printing parameters for the job output.

¨ Job log. The logs for the jobs include log information about the job execution such as starting

time or any other information coded in the programs.

¨ Job spool recipient list. A recipient list can be used for specifying one or more recipients

who will receive automatically the spool list generated by the job.

Starting Background Processing

Starting background processing means to reach any type of job definition screen in order to specify the needed data for the background execution of programs. There are several ways to start the background processing system:

¨ From the initial job definition screen

¨ From the ABAP reporting service screen. From any menu, you can select System Services

Reporting, then enter the report name. The reporting screen includes the option for

background execution by selecting Program Background from the menu.

¨ From the ABAP workbench editor. On the editor initial screen, enter the name of the program

in the input field and select Program Execute Background from the menu.

¨ Sometimes working with business applications, especially navigating through the many

information system and reporting functions, the system allows for background execution.

The last three methods mentioned are virtually the same thing, though the system can display different selection screens. With these three methods of starting background processing, after indicating background execution, the R/3 system displays the Execute report in background screen. The job will have automatically included the report as the first job step, whereas the user has to enter additional job definition fields, such as the job class, target host, start time, and so on.

Saturday, April 26, 2008

Developer Traces

These traces can be useful also for administrators, since some of the files sometimes contain the explicit reason and explanation for system errors.

To display the list of developer traces, from the monitoring menu, select Traces -> Developer traces. The system will display a list with the title Error log files.These files are actually

operating system files located under the work and profile directories. To display the contents of any file, justdouble click on them, or select Log file Display from the menu.

The names of all the developer trace files start with the character string dev. The following list shows the developer trace file names.

Component File name

Dispatcher dev_disp

Work processes dev_w

Dynpro (screen processor) dev_dy

Roll dev_ro

Paging dev_pg

Database interface dev_db

ABAP processor dev_ab

Enqueue process dev_eq

System logging dev_lg

Message server dev_ms

SAPGUI dev_st

APPC−server dev_appc

Transport program calls dev_tp

Remote function calls dev_rfc

You can set the level of tracing to be included in the developer trace files by adding the options to the start command lines of any SAP program. The start commands are normally located in the startup profile of the SAP instances. Available options are

¨ TRACE=0. No trace is written to files.

¨ TRACE=1. Write error messages in the trace file.

¨ TRACE=2. Write the full trace.

¨ TRACE=3. Write the full trace including data blocks.

You can set any of the above trace options for the whole instance, by entering the rdisp/TRACE=

****************************************************************

Tuesday, April 22, 2008

Copying Tables Entries Between Two Different Systems

For example, assume that, after a client copy between two SAP systems, a table content could not be completely copied to the target system (import phase) because the table reached the maximum number of extents in the underlying database. You can avoid having to perform a whole import again by transporting a single table with R3trans.

The general procedure to do this is as follows:

1. Create the control file for the export

2. Run the control file in the source system

3. Check the export log

4. Create the import control file in the target system

5. Run the import control file in the target system

6. Check the import log and the data in the target system

Detailed Procedure

After verifying the client copy log, due to some storage problems in table MOFF, this table could not be completely copied. To avoid having to perform the whole client copy process, just the entries on table MOFF from the source client will be copied to the table MOFF on the target client 010 in the target system T12.

1) In the source system (C12), create a control file, for example, expmoff.ctrl with the following content:

export

client=002

select * from moff where mandt = '002'

2) Run the R3trans utility with the previous control file: R3trans −w expmoff.log −u 18 expmoff.ctrl. While the −w flag indicates the log file, the −u flag sets unconditional modes for the transport. In the export phase, unconditional mode 1 indicates the system to ignore the wrong status of transport requests. Mode 8 allows direct selection of tables when the default modes are not allowed.

By default, the previous command generates the data file trans.dat in the directory where the export has been executed. If both source and target systems share the transport directory, it won't be necessary to copy the trans.dat file. Otherwise, you must use available tools for file transfer, for example, ftp.

3) Check the export log file, expmoff.log, and verify it did not contain any errors.

4) Once in the target system, create the import control file, for example, impmoff.ctrl, with the following content:

import

client=010

5) Then execute it with the R3trans tool: R3trans −w impmoff.log −u 248 impmoff.ctrl

By default it uses the data file trans.dat generated by the previous command file.

The unconditional modes used in the import phase are: 2 for enabling the overwriting of the original, 4 which ignores that the transport request was intended for a different target system, and 8 which allows for importing tables which are restricted by their table types.

If you use the default options for the import, you do not need a control file. The import can be

performed directly with R3trans −i

6) Check the import log file, impmoff.log, to check that the import runs fine without errors. You can also log on to the target client, 010, in the target system and look up the table contents with standard dictionary utilities, such as the data browser (transaction code SE16).

Sunday, April 20, 2008

Monitoring the System Work Processes (SM50)

Notice that you can only display the processes of your local system.

The SAP work processes correspond to operating system processes. You could also monitor these processes from the operating system. In fact, the field PID matches exactly to the Process Id of the underlying operating system. With a UNIX command such as ps −eaf \ grep dw, you can see the SAP processes. On Windows NT systems you can see the processes from the Task Manager as disp+work.

The SAP runtime directory (/usr/sap/

The columns in the work process overview screen show the following information:

No. Refers to the internal ID number of a process. It is useful for identifying messages in the system log belonging to a work process.

¨

Ty. It's the type of work process. On this screen, you can find the following types:

¨DIA. Dialog work processes, in charge of executing interactive dialog steps

¨UPD. Update work process for executing U1 update components). In charge of critical updates on the database ¨

¨UP2. Update work process for executing U2 update components. In charge of performing

secondary updates on the database¨

¨ ENQ. Enqueue work process in charge of setting and releasing lock objects

¨BTC. Background work processes, in charge of executing background jobs

¨SPO. Spool work process in charge of the SAP spooling system (formatting and printing)

PID. Process identification number of the work process which matches the PID of the operating system process.

¨

Status. Shows the current state of the work process. Observe that the process overview screen always offers a snapshot of the processes. So, upon pressing the Refresh icon, it can change. Possible process status are

¨ Running. The process is executing a system request.

¨ Waiting. The process is idle and waiting for any system request.

¨ Hold. The process is held by a single user. Although a Hold state can be normal, having too

many processes in hold state affects the system performance.

¨ Killed or Complet. The process has been aborted with the Restart option set to No.

¨ Stopped. Due to system or application error, the process has stopped and could not restart

automatically.

When the process overview displays many processes with status waiting, it means that the system load is low. When this happens, the SAP dispatcher will try to allocate the same work process for a user, and thus avoid rolling in and out the user contexts.

Reason. This column displays a mnemonic code displaying the reason for a work process with a hold status. Some of the reasons can be activities performed by the lock mechanism, the update process, debugging, CPIC tasks, or RFC requests. If the reason columns display Priv, it means that a work process has been reserved for a private use in a particular ABAP transaction requiring a large paging storage. This mechanism prevents the rolling in and out of the user contexts and the ABAP paging.

¨

Start. This column has either the values Yes or No and indicates whether the work process will be automatically restarted in the event of an abnormal termination. Normally, this field is set to Yes butyou can switch the restart status by selecting the function Restart after error from the Process menu.

Err. Contains the number of times a work process has terminated abnormally.

Sem. This column can contain the number of the semaphore on which a work process is waiting.

Normally, this field is empty. However if you notice that a semaphore number appears often, it might indicate some performance problems in the system and might need some parameter adjustments.

CPU. Contains in number of seconds the CPU time consumed by a work process.

Time. Indicates the elapsed execution time used by the work process for the dialog step that it is currently processing. This column usually contains a small figure. When it displays a large figure, it might indicate that the process is being held in a debugging session.

Program. This column contains the ABAP program that is currently executing.

Client. Indicates the SAP system client where the session is being executed.

User. Contains the user ID whose request is being processed.

Action. Under this field the system shows the actions being performed by the running program. These actions are the same as those recorded by the system performance monitor which is activated by default with the profile parameter stat / level. This column might display actions such as sequential read, insert, or direct read.

Table. This column displays the name of the tables being accessed, if any.

There is more detailed information available from the process overview screen which you can display by selecting the work processes and then clicking on the Detail info button on the application toolbar. With this option, in addition to all the information from the overview screen, the system displays statistical information about the work process such as the memory, development environment, and database usage.

You can terminate a work process with or without generating a core dump file in the operating systems, which can be used for debugging. Before canceling a work process, you should select the menu function Restart after error, either with options Yes or No, to indicate whether the process which is to be canceled should restart or not, after being manually terminated.

To display a trace, choose the work process and, from the menu, select Process -> Trace -> Display file.

By selecting the work process and clicking on the Delete session button on the application toolbar you can also delete a user session and release the work process. However, you should avoid performing this function from this overview, since the work processes can be attending several users, and you could unintentionally affect other users' work. Rather, you should delete a user session from the User overview screen(SM04)

Friday, April 18, 2008

Introduction to Tables & Indexces

¨ Transparent tables

¨ Pool tables

¨ Cluster tables

Transparent tables do exist with the same structure both in the dictionary as well as in the underlying database system, exactly with the same records and field descriptions.

The other two types cannot be directly queried in the underlying database, since those structures are only logically known at the SAP level. These structures can be found in the database but not in a directly readable form.

Pooled Tables, Table Pools, Cluster Tables, and Table Clusters

Pool and cluster tables are logical tables. Physically, these logical tables are arranged as records of transparent tables. The pool and cluster tables are grouped together in other tables, which are of the transparent type. The tables that group together pool tables are known as table pools, or just pools; similarly, table clusters, or just clusters, are the tables which group cluster tables.

* SAP recommends that tables of pool or cluster type be used exclusively for control information such as program parameters, documentation, and so on. Transaction and application data should be stored in transparent tables.

Generating the Table in the Database

Once a table is defined in the ABAP dictionary, it has to be created physically in the underlying database. The generation is performed automatically when activating the table using the ABAP dictionary functions.

There are, however, certain restrictions: only transparent tables are automatically generated. Pool− and cluster−type tables are not automatically generated since, from the database point of view, these type of SAP tables do not match database tables.

This implies that non-transparent tables cannot have secondary indexes, since secondary indexes, just as tables, must also be generated at the database level.

Indexes

The purpose of defining secondary indexes for tables (only for tables of type transparent) is to enhance the access time when performing select operations on the table. The secondary indexes allow access to the table information on which they are defined, using a different order than the one for the primary key. Physically, they behave like a record table subset, ordered by different criteria.

The secondary indexes do actually occupy physical space in the database; therefore, every table update implies a real updating of the secondary indexes. So, if defining secondary indexes helps the search performance, they, however, slow down the updating process.

ABAP dictionary object types

The ABAP dictionary can handle eight different object types

Table. As previously explained, a table is a two−dimensional data matrix. A table can contain zero or many rows, corresponding to the predefined table structure (entity type). This is, at the same time, a complex structure, which can be made up of one or several fields (attributes). Every row that makes up the database table has the same structure and properties. The fields that make up the structure of the table records, as well as its attributes, permitted value range, and so on, are set when defining the table.

Structures. The object structure refers to the definition of a compound object that does not have any content. It's like a table or a view, but it never has entries: it's only a structure. These types of objects are used in programs for defining data structures or for defining data in the interfaces from the module pools and the screens. The basic difference between structures and tables (or views) is that the structure does not exist at the underlying database system level; however, both tables and views do exist in the database. Structures only exist as definitions in the dictionary. As a result, structures do not need to be activated.

Views. From the relational database point of view, a view is a virtual table. It contains data which are really stored in other tables. The contents for the views are dynamically generated when called from a program or a transaction. In the simplest form, the fields within a view all come from a single table, where you could select all of them or just a few (projections), or select some of the fields of the table according to specific criteria (selection) using the Select option. Other, more complex forms of views allow for selecting fields from different tables which are related by foreign keys (join operation). You can also use the three methods combined for creating a view: projection, selection, and join.

Data elements. They are a semantic definition of the properties and type for a table field. It's an intermediate object between the object type domain and the table field. A field in R/3 is always associated with a data element, which at the same time is always related to a domain. Some properties of table fields in the SAP environment can only be defined at the data element level, for example, the text elements associated with the fields. As an example, suppose there is a table with the fields manager_name and employee_name. These fields could have the same technical description−they could be strings with 30 characters−char (30). They would be, however, different at the semantic level: the meaning is different, since they relate to names of people at different levels in company. This difference is only reflected with the text elements associated to the data elements, but it is also useful for the online help which can be associated with the fields.

Domains. They are the formal definition of the data type from a technical and syntactical point of view. They set attributes including data type, length, possible value ranges, and so on.

Lock objects. These types of object are used for locking and synchronizing the access to database records in tables. This mechanism is used to enforce data integrity, that is, two users cannot update the same data at the same time. The lock object definition includes the table or tables which can be concurrently accessed, as well as the key fields for the access. A lock object request is not only used to lock a single record from a table but also to lock a complete logical object. Defining a lock object in the data dictionary automatically generates two ABAP function modules which allows for locking (ENQUEUE) and unlocking (DEQUEUE) the object.

Search helps. These are objects that can be used for assigning input help (using the F4 function key) to fields. These types of objects are new with release 4.0 and are meant to replace the functionality of matchcodes. The main difference between search helps and matchcodes is that search helps are physically built based on transparent tables. There are two types of search helps: collective and elementary.

Type group. These objects contain ABAP type definitions. From version 3.0 onward, developers can define their own data types based on standard R/3 data types.

Development classes are logical groups of development objects which are related, normally deployed for the same application module, related reports, and so on. Development classes are particularly important for team development, the transport system, and use within the repository browser, application hierarchy, and so forth.

Saturday, April 12, 2008

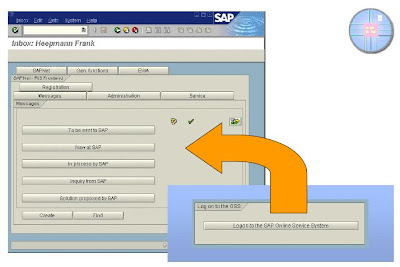

Using SAPNet

SAPNet offers the user two frontends:

SAPNet offers the user two frontends:SAPNet - Web Frontend is an Internet interface

SAPNet - R/3 Frontend is an R/3 interface

For both frontends, you need an S-user ID. You should obtain this ID as early as possible by fax from SAP.

SAPNet - R/3 Frontend was formerly known as the Online Service System (OSS).

SAPNet - Web Frontend

To access SAPNet - Web Frontend:

From the SAP public homepage http://www.sap.com/ , enter your S-user ID

Directly, use URL http://sapnet.sap.com/

You can navigate in SAPNet using aliases: type the alias directly after the URL.

To access SAPNet - R/3 Frontend, you need to have installed a SAP GUI and maintained certain

There are two ways to log on:

From within R/3, choose System → Service → SAP Services (transaction OSS1).

From SAP Logon.

TMS : Transports Between Systems : Setup & Maintenance Phases

The (initial) setup phase, where no relevant client-specific data is yet in the target R/3 System

The (subsequent) maintenance phase, where a complete dataset exists in the target R/3 System

Transports Between Systems: Setup Phase

· For the setup phase, R/3 provides the following tools:

The Workbench Organizer and the Transport System

Remote client copy and client transport

· The setup phase involves the following steps:

1. Replicate the R/3 Repository. Transport any customer-specific software (such as ABAP programs) to the new R/3 System using change requests, which are executed by the Workbench Organizer and the Transport System.

2. Transport the Customizing settings to the new system. To transport the Customizing data, use the remote client copy or client transport tools.

· Client transport and change requests use the transport directory to reach their target system. Remote client copy transports data using an RFC connection.

· Remote client copy and client transport are not designed for transferring large-scale production clients, or for database migration.

· All the SAP Systems in the system landscape should be in one transport domain.

Transports Between Systems: Maintenance Phase

<

<· As soon as the data has been written to the R/3 Systems, and needs to be retained, remote client copy and client transport can no longer be used to transport changes to Customizing.

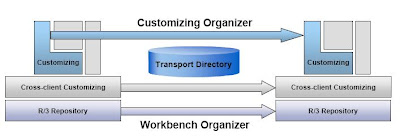

· Therefore, distribute further changes using the Customizing Organizer (CO). To use the CO, you must first activate automatic recording of changes for the client.

· As with the Workbench Organizer (WBO), the CO records the objects that have been changed. In the case of Customizing, these changes are primarily table settings.

· During the maintenance phase, changes to the R/3 Repository and to cross-client Customizing continue to be transported using the WBO.

· The main difference between CO & WBO is that the CO is used primarily to manage changes to Customizing. However, the CO can also be used to manage Workbench requests. To keep Customizing and Workbench development logically separate, changes to the R/3 Repository should only be managed using the WBO.

· Both Customizing and the WBO allow the recorded objects to be transported to the local transport directory. From there, the data is imported into the target system, using the Transport System.

Thursday, April 10, 2008

TMS : Transports in a Single R/3 System

After the R/3 Systems and their clients have been created, these systems and clients must be customized and filled with data.

First, we consider the distribution of these adjustments and data within an R/3 System.

The first step in adjusting an R/3 System to a customer's requirements is the Customizing process.Customizing is done in a separate client, from which the settings can be distributed across other clients in the R/3 System.

During this distribution process, you must differentiate between:

Target clients with existing data that must be retained

Empty target clients that are not yet filled with data

For target clients with existing data, distribute the Customizing settings using transaction SCC1. This transaction allows single table entries to be transported without deleting the target client completely.

For empty target clients that need to be newly configured, the Customizing settings should be distributed using a local client copy. A local client copy can transport all of the Customizing settings, possibly even including all application data. Because of the data dependencies mentioned above, the target client is

normally deleted before data is copied to it. Therefore, a local client copy cannot be used to merge the data of several different clients; rather, its purpose is to newly configure empty target clients.

TMS : Setting up an R/3 Transport Landscape

2. Configure the transport domain controller and define the domain.

3. Configuration of the transport program (tp) is done automatically and

must not be done at OS level.

4. In the TMS:

- Include all remaining systems in the domain

- Define the transport routes

- Define QA approval procedure

5. Set the system change options according to the role of the R/3 System.

6. Create clients and set the client change options for the production system,

development system, and so on.

The steps for setting up a transport landscape are summarized below.

To set up an R/3 transport landscape:

Make a transport directory available to every R/3 System that will be ransporting.

The TMS allows a local transport directory for every R/3 System.

To configure the TMS, define the transport domain controller.

In the TMS:

- Include all remaining systems in the domain.

- Define the transport routes.

Set the system change options according to the role of the R/3 System.

Create clients in every R/3 System and set the client change options (production

system, development system, and so on).

Tuesday, April 8, 2008

TMS: Administering R/3 Systems

To create a transport domain, call the TMS from Client 000. To automatically define the transport domain controller as as the current system choose Tools → Administration → Transports → TransportManagement System.

As soon as the domain has been created, additional systems can apply for acceptance by the domain. For security reasons, these systems are not accepted until they have been authorized by the transport domain controller.

The TMS System Overview displays the various system statuses:

Waiting for acceptance by the domain

Active

System locked for the TMS

System not accepted

System deleted

Technically, TMS can connect systems with different R/3 release statuses. However, SAP does not support any transports between such systems.

Because of its central importance, the transport domain controller should run on an R/3 System with a high availability.

Configuring Transport Routes

To configure the transport routes between systems in the domain, use the hierarchical list editor and graphical editor provided by the TMS. Define these settings in the transport domain controller.

The transport routes can be either consolidation or delivery routes.

For consolidation routes, a transport layer is used, for example to define a

route between the development and the quality assurance system.

Delivery routes connect systems, for example the quality assurance and the

production system. They do not use transport layers.

Create transport routes in the graphical editor using drag and drop.

After the transport routes have been configured in the transport domain

controller, they can be distributed across all systems in the domain.

These setting must be activated in all the systems in the domain. This can also be

done centrally by the transport domain controller.

To enable previous configurations to be reused, you can create versions in the TMS.

Monday, April 7, 2008

Transport Management System (TMS): Basics

Management System.

The Transport Management System (TMS) allows transports between different R/3 Systems to be administered centrally.

The TMS classifies R/3 Systems into transport groups and transport domains:

A transport group consists of all R/3 Systems that can access a common transport directory.

A transport domain consists of one or more transport groups.

To enable transports to be administered centrally, it must be possible to administer all systems in the transport domain from a designated R/3 System, called the transport domain controller.

For the administration of transports, the following information is stored centrally:

The systems participating in the transports

Their transport routes

This configuration data does not need to be set manually in every R/3 System. Instead, using RFC, the transport domain controller distributes the data to all participating R/3 Systems.

Data Structure and Data Types of an R/3 System

The R/3 System consists of various data types.

Certain types of data are only accessible from a particular client. Such data types include business application data (documents, material master records, and so on) as well as most Customizing settings.

These settings:

Define the customer's organizational structures (distribution channels, company codes, and so on)

Adjust the parameters of R/3 transactions to fit customer-specific business operations

Client-specific data types are closely interdependent. Thus, when application data is entered, the system checks whether the data matches the client's Customizing settings. If there are inconsistencies, the application data is rejected. Therefore, application data usually only makes business sense in its specific Customizing environment.

In addition to client-specific data, R/3 can have other settings that, once defined, are valid for all clients.

This data includes:

Cross-client Customizing, such as printer settings

The R/3 Repository, which contains all objects in the R/3 Dictionary (tables, data elements, and domains), as well as all ABAP programs, menus, CUAs, and so on

In the case of cross-client settings, an ABAP report that was originally developed in a certain client may be immediately usable in another client.

Corresponding to the various data types in the R/3 System, there are various types of changes and adjustments to data.

The R/3 System is delivered in standard form and must be adjusted to the customer's requirements during the implementation phase. This procedure is called Customizing. As shown in the graphic,Customizing includes both client-specific and cross-client Customizing data. An R/3 upgrade may require a limited amount of additional Customizing.

Unlike Customizing, enhancements or adjustments to the R/3 Repository are not required to operate an R/3 System.

To adapt the R/3 Repository to a customer's requirements, the customer can develop in-house software.

In addition, customer enhancements can be added to the R/3 Repository. In this case, customer defined objects are used to complement the SAP delivery standard. The precise locations where enhancements can be inserted are specified by SAP.

Finally, R/3 objects such as reports and table definitions can be modified directly. In this case, the R/3 Repository delivered by SAP is not merely enhanced; it is changed. During the next R/3 upgrade,these modifications may therefore need to be adjusted before being incorporated into the new Repository. The adjustment can be a time-consuming process.

Customizing settings must be transported between clients.

Changes to the R/3 Repository must be transported between R/3 Systems.

Friday, April 4, 2008

DB Administration: Periodic Checks

The Database System Check Monitor (transaction DB16) displays an overview of all the main database functions and statuses.

The Backup Log Monitor (transaction DB12) displays information about the status of database and offline redo log file backups.

The Tables and Indexes Monitor (transaction DB02) displays an overview of the storage behavior of the database and the status of the database objects.

The Database Performance Monitor (transaction ST04) displays an overview of the load and configuration of the database management system and the database.

The DBA Operations Monitor (transaction DB24) provides a central point from which you can access all database monitoring operations, such as database backup logs, optimizer statistic runs, and Oracle database checks.

Check these monitors daily to ensure that:

The cost-based optimizer does not use obsolete information

Too many extents are not created

Freespace problems do not occur

-->The cost-based optimizer uses an SQL statement to determine the most effective strategy for retrieving or manipulating database data. The access strategy used depends on the information in the:

Queried table (or tables, for a view or join)

Fields specified in the WHERE clause of the SQL statement

Indexes defined for the tables queried

The cost-based optimizer computes the cost of several strategies for accessing the tables, and chooses the one that requires the smallest amount of data accesses. To calculate the cost of a strategy, the optimizer requires statistical information about the tables and indexes of the database, such as:

Number of table or index rows and number of blocks allocated for the object

Number of distinct values in each column of the table

The statistical information for a table or index is stored in the data dictionary of the database. To collect the statistical information, use the Oracle SQL command ANALYZE table.

Note that the ANALYZE command is expensive.

Table and index sizes and value distributions can change. If the current number of rows of a table differs greatly from the values determined by the last analyze table run, the optimizer may choose an ineffective strategy and the database access time becomes longer.

You should refresh the optimizer statistics at least once a week.

-->Only up-to-date statistical information can ensure that the Oracle cost-based optimizer chooses the optimal access path. However, gathering optimizer statistics is expensive and reduces system performance.

We recommend the following two-phase strategy:

In the first phase, the SAP tools determine which tables require a statistical update. The command sapdba -checkopt PSAP% determines which database objects contain obsolete statistics, and modifies the control table DBSTATC accordingly.

In the second phase, the statistics of the tables marked TODO in the control table DBSTATC are refreshed using command sapdba -analyze DBSTATCO.

This two-phase strategy ensures:

Up-to-date statistics for all tables

Optimal analysis runtime

Updating Oracle Optimizer Statistics

*******************************

Use the DBA Planning Calendar (transaction DB13) to schedule the two phases of the strategy to run consecutively. The analysis commands are performed by program SAPDBA.

Check optimizer statistics determines the tables requiring an analyze table run.

To determine which database objects require a statistical update, choose the keyword PSAP%: all SAP tablespaces.

Update optimizer statistics at least once a week. Perform the updates during periods of low system activity.

Update optimizer statistics updates the table statistics.

To refresh the statistics of all tables with the TODO flag set in table DBSTATC, choose the keyword DBSTATCO: all tables marked in DBSTATC.

Monitoring Oracle Optimizer Statistics

*******************************

Use the following R/3 database monitors to check the state of the statistics.

To display an overview of the analysis dates, use the Database Tables and Indexes Monitor (transaction DB02) and choose Checks → Dates of table analysis. To display detailed information about the table statistics for tables contained in the control table DBSTATC, choose All tables.

To display the logs of the SAPDBA actions performed to refresh the optimizer statistics, use the DBA Logging Monitor (transaction DB24) and choose DB Optimizer.

To display either the SAPDBA check action logs (function ID opt) or the SAPDBA analyze action logs (function ID aly), choose Function IDs.

Check the return code of the actions performed. Actions that did not end successfully are marked either yellow (warning) or red (errors). To display the details of a SAPDBA log, double-click a SAPDBA action.

Periodic Monitoring: Next Extent Problems

*********************************

To avoid problems with the next extent size, SAPDBA has an automatic extent adjustment (-next). Use the DBA Planning Calendar (transaction DB13) to schedule this adjustment to run at least once a week for critical tablespaces that have high data growth.

To run the SAPDBA extent adjustment from the command prompt, enter: sapdba -next PSAP%.

The results of sapdba -next are written to directory sapcheck in file

To adjust the storage settings for single objects, use SAPDBA, and select d - Reorganization. The value for the NEXT extent should be at least 10% of an object's current size.

To display a list of tables and indexes that have allocated more than 1 extent in the last 4 weeks, use the Tables and Indexes Monitor (transaction DB02) and choose Check → Check next extent size. Look for objects that have allocated too many extents or are nearing the MAXEXTENT limit.

The R/3 Database System Check Monitor (transaction DB16) displays information about objects that exceed a threshold of extents (default 80) or are nearing the MAXEXTENT limit. You can also look at the Database Alert Monitor (transaction RZ20).

The INITIAL EXTENT should be large enough for the expected table or index size. If the extent of a data object becomes full during an insert or update operation, the Oracle storage management system attempts to allocate another extent of the size NEXT extent in the tablespace. An object can allocate extents up to the limit MAXEXTENT (default 300).

If the maximum number of extents per object is reached, the error message ORA-1631 (for a table) or ORA-1632 (for an index) is displayed. If this occurs, increase the parameter MAXEXTENT and check the size of the next extent.

Periodic Monitoring: Freespace Problems

********************************

Use the Tables and Indexes Monitor (transaction DB02) to:

Analyze the tablespaces that are marked as having critical freespace problems

Check the rate of the fill level to determine when a tablespace reaches a critical fill level

Check the Database System Check Monitor (transaction DB16) for freespace problem alerts. Potential bottlenecks in the freespace reserve of a tablespace are displayed in the log of command sapdba -check.

Extend critical tablespaces early. To do this, use the SAPDBA to either add a data file to a tablespace or resize the tablespace. (As of Release 4.5, resize is supported in SAPDBA.)

If you add a data file to extend a tablespace, ensure that the size of the new data file is large enough. If necessary, add a new hard disk and create a sapdata

Check the maximum number of data files allowed in your database. This number is defined in the init

After the structure of the data files have been changed, back up the database, or at least the newly added data file and the control files.

The step-by-step allocation of extents ensures that objects only allocate space when they need it. If there is not enough contiguous freespace to allocate a new extent, error message ORA-1653 (for a table) or ORA-1654 (for an index) is displayed.

Database System Check: sapdba -check

********************************

Use SAPDBA to check the following:

Table and index fragmentation

Tablespace filling

Physical consistency of the database (control files, redo log files, and data files)

Severe error messages in the alert log

init

Database problems specific to the R/3 environment

Schedule SAPDBA to run once a week, using either the DBA Planning Calendar (transaction DB13) or enter the sapdba -check command at the OS level.

Command sapdba -check generates a log file called

Periodic Monitoring: Archive Directory

***********************************

If an archiver stuck situation occurs:

The database system cannot write to the online redo log files

The database cannot perform any modifications to the database data

SAPDBA cannot connect to the database

If the archiver becomes stuck, monitor the database error logs. The archiver can become stuck if:

The database is in archive mode, but the Oracle archiver is not running. If this occurs, SAPDBA displays an error message. Use SAPDBA to start the Oracle archiver.

The archive directory overflows, due to a large amount of data manipulation (such as from upgrades or batch input). If this occurs, free up space in the archive directory.

To make space available in the archive directory, back up the offline redo log files to tape, using SAPDBA or the brarchive -cds. Before you delete offline redo log files, make 2 backups of each file.

If extra space is available, create a temporary (dummy) file in the saparch directory. In the case of an archiver stuck situation, these files can be deleted to free up some space.

Periodic Monitoring: Database Backups

***********************************

The DBA Planning Calendar (transaction DB13) displays the status of all the activities that were performed.

The DB Operations Monitor (transaction DB24) displays information about:

All the backup actions

The status of database and the offline redo log file backups

The log files written by BRBACKUP and BRARCHIVE

To prevent database errors from occurring, perform the following monitoring and maintenance tasks on your Oracle database:

Monitor the state of the optimizer statistics

Update the optimizer statistics

Monitor the database alert log

Check the tablespace fill levels and increase their size when necessary

Monitor the table and index extent growth

Monitor the offline redo log activity

-

Wednesday, April 2, 2008

Database Backups

Each database management system stores data in suitable structures on disk and writes data modifications to the database log. For the Oracle RDBMS:

The data is stored in the data files of the tablespaces.

The log information is recorded in the currently active online redo log file.

Administration data is stored in the parameter and control files.

The data files, the online redo log files, profiles, and a control file are backed up during a database backup.

When the currently active online redo log file is full, Oracle automatically writes to the next online redo log file. The Oracle Archiver copies the completed online redo log file to the archive directory. The copy is called the offline redo log file. The offline redo log files are backed up during an offline redo log file

backup.

In the event of data loss, you need to recover the database to a point in time as close as possible to the moment of loss. To ensure that you can do this, you need both database and offline redo log file backups.

To perform a restore, use a backup of the data files (or a part of the backup) for the starting point.

The log information that was generated during and after the database backup is used to recover all modifications that were made to the data. Usually, this log information is retrieved from the offline redo log file backups.

Offline Backups

When an offline backup is performed, the database is shut down and remains unavailable while the backup is running. The work processes receive a return code of class "reconnect" from the database.

Set the R/3 parameters:

rsdb/reco_trials = n, where n = no. of times the work processes attempt to connect to the database.

rsdb/reco_sleep_time = m, where m = no. of seconds the work processes sleeps between attempts.

Although the R/3 System is set up to remain online throughout the backup, the R/3 System is not available to users. The R/3 System remains online to preserve the R/3 buffer contents, for performance reasons.

Because the Oracle data files remain unchanged, a full offline backup is Consistent.

The following files are backed up when an offline backup is performed:

The data files of all tablespaces belonging to the database

The online redo log files

The control file

The profiles init

When the offline backup is finished, you must back up the offline redo log files.

Online Backups

During an online backup, the Oracle database and the R/3 System remain available. Online backups cause a small reduction in system performance.

The following files are backed up when an online backup is performed:

The data files of all tablespaces belonging to the Oracle database

The control file

The profiles init

Caution: Because the data files are updated concurrently, online backups are not consistent. An online backup can only be consistent and usable in conjunction with the log information written during the online backup.

After an online backup is finished, the SAP tool BRBACKUP switches to a new online redo log file and the Oracle archiver copies the previously active online redo log file to the directory saparch. The entire log information generated during the online backup is contained in the offline redo log files.

Back up the offline redo log files when the online backup has finished.

When you perform an online backup, you do not need to back up the online redo log files.

Offline Redo Log File Backups

Offline redo log files are copies of online redo log files which have been saved by the Oracle archiver to directory saparch. Online redo log files are cyclically overwritten.

Log information is constantly generated when database data is modified. Therefore, disk space in the archive directory must be continuously released for new log files. When the archiver is unable to write to the directory specified by the parameter log_archive_dest, Oracle reports error 257: Archiver is stuck or

error 255: Error archiving log, and the database becomes stuck. An error message is then written to the Oracle trace files.

In the case of a restore, if any of the required offline redo log files do not have a valid backup, data loss occurs. You must back up the the offline redo log files before you delete them from the archive directory. For security reasons, back up 2 copies of each offline redo log file on separate tapes.

If there are database changes that are not contained in the latest redo log file backup, then, in the case of a database failure, you may be unable to recover all the changes.

An online backup is useless without the log information that was generated during the database backup.

SAP BACKUP TOOLS

Database backups are performed by the SAP tool BRBACKUP.

Offline redo log file backups are performed by the SAP tool BRARCHIVE.

There are three ways to call these tools:

Using the DBA Planning Calendar (transaction DB13)

Using the SAP database administration tool SAPDBA at operating system level

Directly, at the operating system level

SAP recommends the following procedure:

Schedule all regular backups with the DBA Planning Calendar (transaction DB13)

Perform additional backups with SAPDBA

All the steps of a backup are logged to operating system files and in database tables.

Backup Profile Parameters for Tape Initialization

The profile init

tape_use_count defines how often a tape can be reused.

expir_period defines the length of the backup cycle. To allow the tape to be reused after 28 days, set this parameter to 28.

backup_dev_type defines the type of backup device used. To perform backups to a local tape device,enter tape. Other options include pipe (remote), tape_auto (auto loader), util_file (backint interface),and disk.

volume_backup defines the tape pool for the database backups

volume_archive defines the tape pool for the offline redo log file backups

Profile Parameters for Database Backup

Use the following init

Set parameter backup_mode to all in order to perform a complete database backup

backup_type defines whether the backup is online or offline

tape_size defines the physical tape size for tapes used by BRBACKUP. Specify the size of the smallest tape in your tape pool (in MB). For example, specify 32000M for a 70 GB tape. The valuespecified for this parameter must be equal to the amount of uncompressed data the tape can hold.

When data is backed up sequentially to several tapes and only one tape device is available, BRBACKUP requests the tapes in the order defined by the parameter volume_backup.

Both rewind and no-rewind tape drivers are required by the SAP backup tools. A no-rewind driver must be assigned to parameter tape_address, and a rewind driver to parameter tape_address_rew.

Profile Parameters for Offline Redo Log Backup

Use the following init

Set parameter archive_function to - cds (copy_delete_save). This ensures that two copies of one offline redo log file are backed up to different tapes.

Option -cds (copy_delete_save):

- Creates a second backup of the oldest offline redo log files already backed up once

- Deletes the offline redo log files that have been backed up twice from the archive directory, in order to free disk space

- Backs up all new offline redo log files for the first time

tape_size_arch defines the physical tape size for tapes used by BRARCHIVE. Specify the size of the smallest tape in your tape pool (in MB). For example, specify 6000M for a 6 GB tape.

tape_address_arch and tape_address_rew_arch specify the drivers of the tape devices to be used by BRARCHIVE to perform the offline redo log file backup. If they are not defined, BRARCHIVE uses parameters tape_address and tape_address_rew to determine the drivers.

REFERENCE : BC505 Oracle Database Administration

Database Administration

When an Oracle database instance is started, two processes are created, the listener process and the Oracle process. Oracle on the Windows NT operation system uses the Windows NT implementation of threads. The Oracle process consists of the shared threads and the dedicated shadow threads.

Database data is stored in 8 KB blocks in data files on disk. To accelerate read and write access to data, these data blocks are cached in the database buffer pool in main memory.

Modifications to database data are logged in the online redo log files. This procedure ensures data security. To ensure fail-safe database operation, without using additional operating system utilities, the control files and the online redo log files of the database system should be mirrored.

The Oracle database management system holds the executable SQL statements in the Shared SQL Area,which is part of the shared pool.

Each R/3 work process: Connects to the database as one database user, SAPR3

Handles database requests for the different R/3 System users

Communicates with a corresponding shadow thread on the database

Can reconnect to the database if the connection is lost due to database errors

Starting and Stopping Database

****************************

When an Oracle database is started, it goes through 3 phases:

In the No mount phase, the database instance is built up. Operating system resources are allocated using configuration information stored in the profile init

In the Mount phase, the control files of the database are evaluated. The system reads the information about the file structure of the database. Data files and logs are not yet opened.

In the Open phase, all files in the database system are opened. If required, an instance recovery is performed immediately after opening the database. Pending database transactions are ended.

You can shut down the database using one of three commands:

SHUTDOWN NORMAL: No new database logon possible. After all database user have logged off,the database is closed systematically: All files are closed, the database is dismounted, and the instance is shut down. The database is consistent after shutdown.

SHUTDOWN IMMEDIATE: Only the current commands are executed. PMON ends all sessions and performs a rollback of the open transactions. The database is then closed systematically (as for a normal shutdown). The database is consistent after shutdown.

Caution: DBWR and ARCH may require up to 1 hour post-processing time.

SHUTDOWN ABORT: Emergency database shutdown. Users are not logged off, and a rollback of the open transactions is not performed. The database is not consistent after shutdown. An instance recovery is automatically performed at the next database startup.

An Oracle database system has three threads that write information from the Shared Global Area (SGA) to the appropriate files:

During a checkpoint, the Database writer (DBWR) asynchronously writes the changed blocks from the SGA to the database data files.

To speed up the writing of checkpoints, the Checkpoint (CKPT) thread starts.

The Logwriter (LGWR) synchronously writes the change log from the SGA redo log buffer to the currently active online redo log file.

In a production database system, you must always run the database in ARCHIVELOG mode and ensure that the Archiver thread (ARCH) is started (init

ARCH determines the archive directory from the init

Once the offline redo log file has been successfully created, the corresponding online redo log file is released to be overwritten with new log information.

If there is no freespace available in the archive directory, or if a file with the same name already exists in the archive directory, the Archiver does not archive the file. After a corresponding number of redo log switches, the database becomes "stuck". Database changes cannot be committed as long as this archiver stuck situation persists.

A database is divided into logical storage units called tablespaces. Tablespaces are divided into logical units of storage called segments (tables or indexes). Segments are further divided into extents, which consist of contiguous data blocks. A data block (normally 8 KB) is the smallest unit of I/O used by a database.

A tablespace in an Oracle database consists of one or more physical data files. A data file can be associated with only one tablespace. You can increase a tablespace in two ways:

1) Add a data file to a tablespace. When you add another data file to an existing tablespace, you increase the amount of disk space allocated to the corresponding tablespace.

2) Increase the size of a data file.

Storage parameters such as INITIAL, NEXT, and MAXEXTENTS allow you to manage space allocated to a table. In the next unit, we discuss how to manage storage with these storage parameters.

The R/3 naming convention for tablespace names is defined as follows:

PSAP< tablespace_name > < extension >.

The abbreviations in the tablespace name are part of the directory name and file name of each data file. Directories and data files are numbered.

The objects located in the tablespaces SYSTEM, PSAPROLL, and PSAPTEMP belong either to the Oracle database users SYS or SYSTEM. Do not create any objects owned by other users in these tablespaces.

The objects located in the other tablespaces belong to the SAP R/3 database user SAPR3. R/3 System users do not have a database system user.

The R/3 System and SAP tools, such as SAPDBA, require that the naming conventions be observed. The installed system constitutes a logical unit, which you should not change. In this way, SAP can ensure that you receive fast and efficient support.

ORACLE DIRECTORY STRUCTURE

*****************************

Directory and file names are standardized in the R/3 environment. We recommend that you use the following standards:

Tablespace files reside in the sapdata

The online redo log files reside in the origlog and mirrlog directories.

The offline redo log files are written to the saparch directory.

There should be at least 3 copies of the Oracle control file.

The profile init

The profile init

The profile init

The Oracle alert file is written to directory saptrace\background.

Trace files of the Oracle shadow processes are written to the directory saptrace\usertrace

During reorganization, export datasets are written to directory sapreorg.

The directories saparch, sapcheck, sapreorg, and sapbackup are used by the SAP database tools.